Confronting Bias in Artificial Intelligence (AI)

Artificial Intelligence (AI) has crept into nearly every area of our daily lives. These systems are created by humans, for humans, using data that humans generate. It’s therefore almost inevitable that AI systems inherit the biases humans already carry.

Now, if we are going to keep relying on AI systems at this scale, we must confront any possibility of exclusion, unfairness, or discrimination. In this article, we discuss one of the more troubling aspects of using AI in human affairs: AI bias. We cover its types, consequences, detection, and mitigation.

What is AI Bias?

AI bias is a systematic distortion in machine learning (ML) models and algorithms that leads to prejudice for or against a person or group. The result is an AI system whose outputs discriminate against a particular person or group, producing unfair outcomes.

AI bias is sometimes called algorithm bias or machine learning bias. This phenomenon often originates from incorrect assumptions in the ML pipeline, whether in the data pre-processing, algorithm design, or model outcomes.

As humans, we carry biases, both consciously and subconsciously, with us. For instance, we might give favorable treatment to people we consider “good-looking.” Or, we might assume that people who dress a certain way don’t hold a 9-5 job before we even find out if they do. Stereotypes like these are part of the data and prompts that are fed into AI systems.

When AI systems reinforce stereotypes and produce skewed judgments, they become untrustworthy. But with AI shaping our future and automating critical decision-making processes, we cannot afford that mistrust. That’s why AI researchers are focused on building systems that produce fair, equal, and inclusive results.

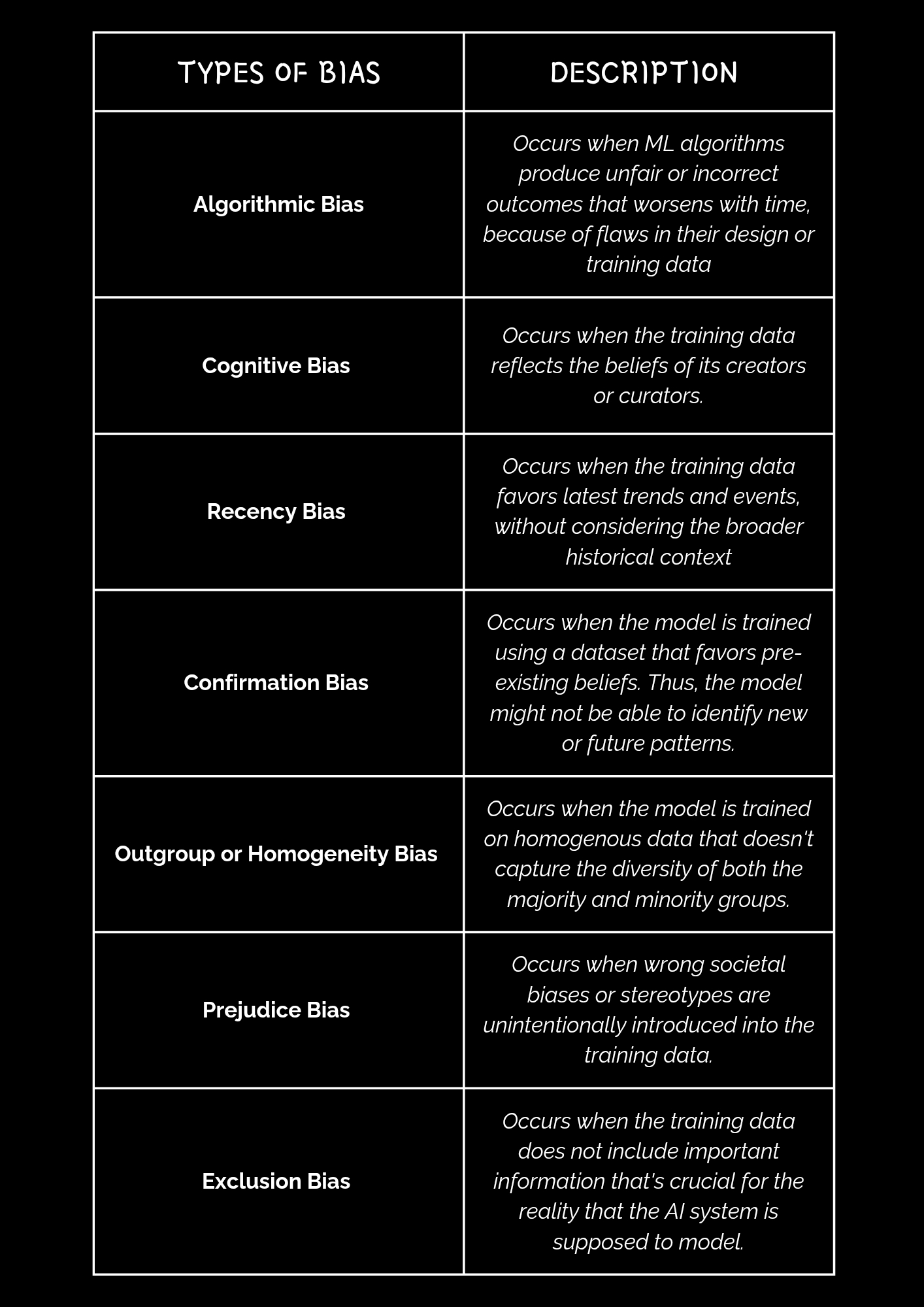

Types of Bias in AI

Photo by Author

Below are the most common types of AI biases:

- Algorithmic Bias

Algorithmic bias occurs when ML algorithms produce unfair or incorrect outcomes, which can worsen over time, because of flaws in their design or training data. This bias isn’t always intentional, but its impact can be significant.

- Flaws in the algorithm design: An AI practitioner may intentionally or unintentionally give more importance to certain features or attributes of the dataset while designing and implementing the algorithm. This can lead to discrimination against, or underrepresentation of, certain groups. For example, if a loan approval algorithm relies heavily on income level alone without considering other factors, it might unfairly reject applications from people in lower-income groups.

- Flaws in the training data: If the data a model learned from is biased, the model will likely replicate this bias in its decisions. For example, if a hiring algorithm was trained on past data that favored one gender over another, it might continue to favor that gender even if it’s not explicitly told to do so.

- Cognitive Bias

As mentioned earlier, we inherently carry biases with us as humans. These biases can slip into a training dataset without the data scientists or AI engineers realizing it. When this happens, whether through the data selection process or how the data is weighted, it results in a type of AI bias known as cognitive bias. Cognitive bias in AI occurs when the training data reflects the beliefs of its creators or curators.

For example, a data scientist might be working on a model that recommends personalized learning paths for students. If they assume all students can access advanced technology and resources in their schools, that belief can slip into the training data. The resulting model may recommend online simulations or advanced software to students in underfunded schools that lack them.

- Recency Bias

Sometimes, a dataset influenced by recent events can lead to a cognitive bias known as recency bias. This occurs when the training data favors the latest trends and events without considering the broader historical context.

For example, if the training dataset of a stock prediction model only contains information on the hottest stocks currently in the market, the model might place disproportionate weight on their recent performance. The model might then be unable to accurately identify patterns and predict future stock prices because it cannot access historical information on those stocks.

- Confirmation Bias

Confirmation bias is roughly the opposite of recency bias. In this case, the model is trained on a dataset that favors pre-existing beliefs, so it might be unable to identify new or emerging patterns. For example, if a fashion trend prediction model is trained only on data from previous seasons, it might overlook new styles gaining popularity because it is biased toward the historical trends it has already learned.

- Outgroup Homogeneity Bias

Outgroup homogeneity bias in AI occurs when the model is trained on homogeneous data that doesn’t capture the diversity of both the majority and minority groups. The dataset focuses on the characteristics of the majority group without considering the subtle differences present in minority groups.

Homogeneity bias is often a result of the AI engineer’s lack of exposure to diverse groups (outgroups) outside their own (ingroup), leading to racial bias or unfair outcomes. For example, a model that assesses students’ writing might be trained primarily on essays by native English speakers. Thus, the model may consider unique phrasing or structures from non-native speakers as errors and unfairly score them.

- Prejudice Bias

This type of bias in AI stems from harmful societal biases or stereotypes that are unintentionally introduced into the training data. When AI systems are trained on datasets that contain historical biases, they can replicate and amplify these biases.

For example, if a recruitment algorithm is trained on data where women have historically been underrepresented in engineering roles, the AI might favor male candidates, supporting the stereotype that men are superior for such jobs.

- Exclusion Bias

This type of bias occurs when the training data leaves out information that is crucial to the reality the AI system is supposed to model. Exclusion bias often happens during the data preprocessing stage, when a researcher decides which data points to keep or remove.

For example, a researcher might exclude attendance data while working on a student success prediction model. However, attendance might actually be a key predictor of student performance.

Ultimately, feeding an AI system millions of data points doesn’t automatically make it neutral or trustworthy. The humans behind those datasets can still heavily influence it.
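To make the hiring example above concrete, here is a minimal sketch in Python. Everything in it is hypothetical: the records are invented, and `train_rate_model` is a deliberately naive stand-in for a real model. The mechanism is the point: a model that learns only from skewed historical outcomes tends to reproduce the skew, even though nobody told it to prefer one group.

```python
from collections import defaultdict

# Hypothetical historical hiring records: (group, was_hired).
# The counts are invented purely for illustration.
HISTORY = (
    [("men", True)] * 60 + [("men", False)] * 40
    + [("women", True)] * 20 + [("women", False)] * 80
)


def train_rate_model(records):
    """'Train' a naive model: learn the past hire rate for each group."""
    hired = defaultdict(int)
    total = defaultdict(int)
    for group, was_hired in records:
        total[group] += 1
        hired[group] += int(was_hired)
    return {group: hired[group] / total[group] for group in total}


def predict(model, group, threshold=0.5):
    """Recommend hiring when the learned rate for the group meets the threshold."""
    return model[group] >= threshold


model = train_rate_model(HISTORY)
print(model)                      # learned hire rate per group
print(predict(model, "men"))      # recommendation for a candidate from each group
print(predict(model, "women"))
```

With these made-up numbers, the model recommends candidates from one group and rejects candidates from the other based on nothing but the historical pattern.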

Consequences of Biased AI Systems

AI biases are often unintentional and can be difficult to notice because they are ingrained in our society and may seem “normal.” It’s only when we start seeing the consequences of these biases that we truly understand the implications of their existence. Below are some of these consequences:

- Unfair treatment

Biased AI systems can limit the access of marginalized groups to essential services. For instance, a model trained on a medical dataset that under-represents women in the healthcare sector can make it harder for female patients to get appointments. This can lead to unequal access to healthcare and potential harm to women.

- Discrimination

Bias in AI can amplify existing inequalities in our society. For example, in the criminal justice system, a biased algorithm can lead to discrimination against people of color, who could be more likely to be arrested or to receive harsher sentences.

- Technology mistrust

When users can’t trust an AI system to serve everyone’s needs regardless of race, gender, age, socioeconomic background, or other characteristics, they won’t want to be associated with the technology.

Being aware of the different biases in AI and their consequences for individuals and society at large is the first step to building more equitable models. The second step is to detect these biases early in the development stage of the AI system and reduce their negative effects.

How to Detect Bias in AI

Photo by Author


To detect bias in AI systems, researchers and practitioners typically ask these three critical questions:

- Was the model trained with diverse datasets?

A model with a homogeneous training dataset will likely produce biased results. So, it’s essential to check whether the model was trained with diverse and representative data to ensure fair treatment across demographic groups. When every group is well represented in a dataset, the model is less likely to favor one over another.

- Is the model’s judgement fair?

If a model’s outcomes unjustly favor or discriminate against certain groups, then it’s an unfair model that’s promoting human biases. A fair model makes equitable decisions across different demographic groups.

- Is the model transparent?

A transparent model clearly explains every decision it makes and the reasoning behind every outcome, making it easier to detect hidden biases. Transparent models also help both technical and non-technical users understand AI systems.
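The first two questions can be turned into simple numeric checks. The sketch below is one possible approach, not a standard procedure: the function names and the toy data are assumptions made for illustration. It measures how much of a dataset each group makes up, the rate of favorable predictions each group receives, and the ratio between the lowest and highest of those rates.

```python
from collections import Counter


def representation_shares(groups):
    """Share of the dataset that belongs to each group."""
    counts = Counter(groups)
    total = len(groups)
    return {g: n / total for g, n in counts.items()}


def selection_rates(groups, predictions):
    """Fraction of favorable predictions (1 = favorable) within each group."""
    positives = Counter()
    totals = Counter()
    for g, p in zip(groups, predictions):
        totals[g] += 1
        positives[g] += p
    return {g: positives[g] / totals[g] for g in totals}


def disparate_impact_ratio(rates):
    """Lowest selection rate divided by the highest; 1.0 means equal rates."""
    highest = max(rates.values())
    return min(rates.values()) / highest if highest > 0 else 1.0


# Hypothetical toy data: group label and model prediction for ten people.
groups = ["a"] * 8 + ["b"] * 2
predictions = [1, 1, 1, 1, 1, 1, 0, 0, 1, 0]

shares = representation_shares(groups)         # group "b" is only 20% of the data
rates = selection_rates(groups, predictions)   # favorable rate per group
ratio = disparate_impact_ratio(rates)          # further below 1.0 = larger disparity

print(shares, rates, round(ratio, 3))
```

What counts as an acceptable share or ratio is a policy decision that depends on the application; the code only surfaces the numbers so that people can make that decision.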

How to Reduce Bias in AI

Mitigating AI bias is a complicated challenge. Bias can arise from the data, the algorithm, and the user, so it’s sometimes difficult to identify and isolate its different sources. However, below are some of the approaches AI researchers have proposed for preventing AI systems from promoting negative human bias:

- Using diverse data collection

As stated earlier, a diverse dataset is vital for creating equitable AI systems. Therefore, data should be pre-processed to identify and address biases before training the model. This can be achieved by using evenly distributed datasets that represent every group being considered. It’s also vital to regularly retrain the algorithm with updated datasets that reflect current societal changes to avoid confirmation or prejudice biases.

- Building transparent AI systems

Transparent AI systems provide clear explanations of a model’s input, training data, and the logic behind its decisions. This information reveals whether the system’s decision is biased and when a model deviates from the intended outcomes. When users know how a system arrived at its conclusion, it builds their trust in the system. However, building transparent models can be challenging for black-box systems such as Large Language Models (LLMs), where the training data is private and the behavior of the algorithms cannot be well documented.

- Using human reviewers

In what is also known as a “human-in-the-loop” system, human reviewers can find biases that AI systems may easily overlook. During AI development and evaluation, organizations should assemble a team that assesses the decisions an AI system makes before they are approved. For this process to yield meaningful results, the review team should include people who differ in background, such as race, gender, and educational level. This diversity ensures the model is reviewed from different perspectives, and its potential for bias is considered from all angles.

- Continuous model monitoring

AI models are not static. They evolve as they interact with real-world data and biased users, which can introduce new biases over time. Monitoring and testing these models is crucial to identify and address potential issues before they cause harm. This approach involves periodically evaluating the model’s performance with real-world data and making necessary adjustments to maintain fairness and accuracy.
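As a rough illustration of periodic evaluation, the sketch below recomputes a fairness gap for each batch of real-world predictions and flags the batches where the gap is too large. The helper names, the batch format, and the `max_gap=0.2` threshold are all assumptions chosen for this example; a real deployment would pick its own metric and threshold.

```python
def parity_gap(groups, predictions):
    """Largest difference in favorable-prediction rate between any two groups."""
    totals, positives = {}, {}
    for g, p in zip(groups, predictions):
        totals[g] = totals.get(g, 0) + 1
        positives[g] = positives.get(g, 0) + p
    rates = [positives[g] / totals[g] for g in totals]
    return max(rates) - min(rates)


def monitor(batches, max_gap=0.2):
    """Return the indices of batches whose parity gap exceeds max_gap."""
    flagged = []
    for i, (groups, predictions) in enumerate(batches):
        if parity_gap(groups, predictions) > max_gap:
            flagged.append(i)
    return flagged


# Hypothetical weekly batches of (group labels, model predictions).
batches = [
    (["a", "a", "b", "b"], [1, 0, 1, 0]),  # both groups treated alike
    (["a", "a", "b", "b"], [1, 1, 1, 0]),  # group "a" starts to be favored
    (["a", "a", "b", "b"], [1, 1, 0, 0]),  # only group "a" gets favorable results
]

flagged = monitor(batches)
print(flagged)  # indices of the batches that need a closer look
```

A flagged batch is a prompt for investigation and possibly retraining, not proof of bias on its own, especially when batches are as small as the ones in this toy example.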

Finally, mitigation approaches for AI bias are still a work in progress under active research and development. In the meantime, the strategies shared above can help organizations take meaningful steps toward ensuring fairness, transparency, and inclusivity in AI development.
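As one concrete instance of the data strategy above, when collecting more data from an underrepresented group is not possible, a common workaround is to reweight the examples so that every group carries the same total weight during training. The sketch below is a minimal version under that assumption; `balancing_weights` is a hypothetical helper, and its formula mirrors the "balanced" class-weight heuristic in scikit-learn, applied here to groups rather than class labels.

```python
from collections import Counter


def balancing_weights(groups):
    """Weight each example so every group carries the same total weight."""
    counts = Counter(groups)
    n_groups = len(counts)
    total = len(groups)
    # Examples from rare groups get larger weights than those from common groups.
    return [total / (n_groups * counts[g]) for g in groups]


# Hypothetical dataset where group "b" is heavily underrepresented.
groups = ["a"] * 8 + ["b"] * 2
weights = balancing_weights(groups)

weight_a = sum(w for g, w in zip(groups, weights) if g == "a")
weight_b = sum(w for g, w in zip(groups, weights) if g == "b")
print(weight_a, weight_b)  # both groups now contribute equally
```

Reweighting evens out each group’s influence on training, but it cannot add diversity that the data never contained, so it complements better data collection rather than replacing it.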

Conclusion

This article has explained the various sources of AI bias, their profound societal impact, and some strategies for combating them. It’s clear that if AI systems are not carefully designed, they could become a sociotechnical disaster. As we look to the future, we all need to be part of the conversation, because AI is becoming the cornerstone of technology in every sector and it should work for everyone. We must remain vigilant in safeguarding this technology against subtle yet harmful biases.


Praise James

Technical Content Writer & Storyteller